Generative AI, Explained

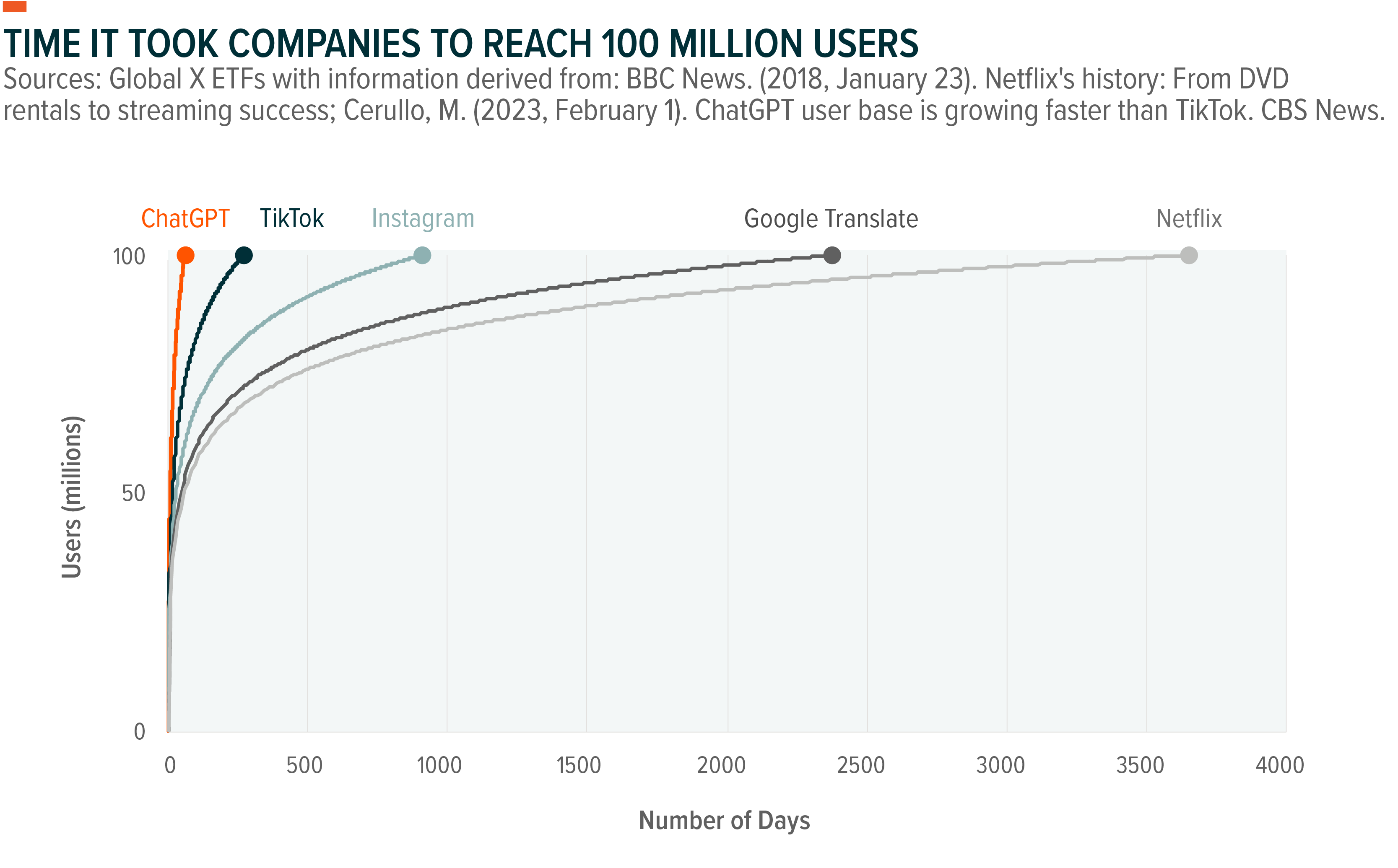

It’s not often we see a technology gain adoption and attention as quickly as OpenAI’s ChatGPT has since its launch in late 2022. ChatGPT is estimated to have reached 100 million users in just two months.1 It took Netflix 10 years to reach 100 million users; six and a half years for Google Translate; roughly two and a half years for Instagram; and about nine months for TikTok.2,3

Generative artificial intelligence (AI) is a rapidly evolving field that has the potential to revolutionize many industries. This powerful technology uses deep learning algorithms to create new and original content, ranging from text and images to music and 3D models. As a result, generative AI has garnered the attention of investors looking to capitalize on its vast potential. In this piece, we explain generative AI, look at its history, and survey its current and future use cases.

This piece is part of our Generative AI series of research.

Key Takeaways

- Generative AI represents the most powerful and consumer-friendly class of AI models to date.

- Google’s Transformer model paved the way for the development of more advanced generative AI models, such as BERT and GPT-3. ChatGPT was originally built on GPT-3.5, a refined successor to GPT-3.

- Generative AI has a wide range of current and future use cases across various industries, including content creation, virtual customer service, health care, finance, language translation, and gaming.

What Is Generative AI?

Generative AI refers to artificial intelligence systems that are designed to create new and original content based on the data they are trained on. This can include generating text, images, music, and even 3D models. Unlike discriminative AI, which is used to classify and categorize data (for example, labeling an email as spam or not spam), generative AI learns the probability distribution underlying its training data and samples from it, producing new outputs that follow the patterns it has learned.
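To make the generative idea concrete, here is a deliberately tiny sketch: a bigram model that records which word follows which in a training text, then samples new word sequences from those learned frequencies. It is not a deep learning model, and the corpus and function names (`train_bigram`, `generate`) are hypothetical, but the principle of learning a probability distribution from data and sampling from it is the one large generative models apply at vastly greater scale. A discriminative model trained on the same text would instead output a label for an input, such as a topic, rather than new text.

```python
import random
from collections import defaultdict


def train_bigram(text):
    """Record which word follows which: a tiny probabilistic model of the training text."""
    words = text.split()
    follows = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)  # repeated entries encode how often a pair occurs
    return follows


def generate(follows, start, length, rng):
    """Sample new text one word at a time from the learned next-word frequencies."""
    out = [start]
    for _ in range(length - 1):
        choices = follows.get(out[-1])
        if not choices:  # dead end: this word was never seen with a successor
            break
        out.append(rng.choice(choices))
    return " ".join(out)


# Hypothetical toy corpus standing in for real training data.
corpus = "the cat sat on the mat the dog sat on the rug the cat saw the dog"
model = train_bigram(corpus)
print(generate(model, "the", 8, random.Random(0)))
```

Because words are sampled, different seeds produce different sentences, yet every adjacent word pair in the output appeared somewhere in the training text: the model creates new sequences that follow learned patterns.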

A History of Generative AI: Google’s Transformer Model

Generative AI has a long and fascinating history, but it wasn’t until the development of deep learning algorithms that it became a practical tool for creating new and original content. One of the most significant breakthroughs in this field was Google’s Transformer model, which was introduced in 2017.

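The Transformer model was designed to address the limitations of previous sequence-to-sequence models in natural language processing, which typically relied on recurrent neural networks that process text one word at a time and struggle to retain information across long passages. The Transformer replaced recurrence with self-attention, a mechanism that lets each element of a sequence weigh its relationship to every other element directly and that can be computed for all positions in parallel. This allowed the model to better understand and generate text, made training on large datasets far more efficient, and quickly established it as the state-of-the-art architecture for natural language processing tasks.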
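A minimal, pure-Python sketch of scaled dot-product self-attention, the core operation described above: each token's query vector is compared with every token's key vector, the scores are divided by the square root of the key dimension and turned into weights with a softmax, and each output is the weighted average of the value vectors. The toy inputs and identity projection matrices (`w_q`, `w_k`, `w_v`) are illustrative assumptions; real Transformers learn these matrices and add multiple attention heads, masking, and much larger dimensions.

```python
import math


def matmul(a, b):
    """Multiply two matrices represented as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a sequence x (one row per token)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose keys
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # one row of attention weights per token
    return matmul(weights, v), weights


# Three toy 2-dimensional token vectors; identity projections keep the example readable.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, including itself; the third token, whose vector overlaps with both of the others, gives itself the highest weight. All pairs are scored at once, which is what makes the computation parallelizable.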

The success of the Transformer model led to the development of many new and improved language models, including Google’s BERT and OpenAI’s GPT-3. BERT, or Bidirectional Encoder Representations from Transformers, is pre-trained by predicting words that have been masked out of a sentence using the context on both sides, which teaches it the relationships between words. OpenAI’s GPT-3, on the other hand, is a language model that generates human-like text by repeatedly predicting the next word or word fragment, using a deep neural network with 175 billion parameters.4
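As a rough check on the 175 billion figure, a common rule of thumb counts about 12 × d_model² weights per Transformer layer (4 × d_model² for the query, key, value, and output projections, plus 8 × d_model² for a feed-forward block four times as wide as the model), plus the token and position embedding tables. The sketch below applies that estimate to the configuration reported for the largest GPT-3 model (96 layers, width 12,288, 2,048-token context, 50,257-token vocabulary). The function name is hypothetical, and biases and layer-normalization weights are ignored.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, context_len):
    """Rule-of-thumb parameter count for a GPT-style decoder-only Transformer.

    Per layer: 4 * d_model**2 for the attention projections (query, key, value, output)
    plus 8 * d_model**2 for a feed-forward block that expands to 4 * d_model and back.
    Biases and layer-norm weights are ignored because they are comparatively tiny.
    """
    per_layer = 4 * d_model**2 + 8 * d_model**2
    embeddings = vocab_size * d_model + context_len * d_model  # token + position tables
    return n_layers * per_layer + embeddings


# Configuration as published for the largest GPT-3 model.
total = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)
print(f"{total:,} parameters (~{total / 1e9:.1f} billion)")
```

The estimate comes to roughly 174.6 billion, close to the published 175 billion, and shows that almost all of the parameters sit in the repeated layers rather than in the embeddings.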

In the context of generative AI, a parameter is a numerical value, such as a connection weight in a neural network, that the model learns during training and that governs its behavior. Machine learning models are mathematical algorithms designed to learn patterns in data and make predictions based on those data. The parameters of a model determine how it processes the data and how it generates predictions.

Because parameters encode what a model has learned, they largely determine its output. In a text generation model, for example, they shape the style, tone, and content of the resulting text. Adjusting them, typically by further training (fine-tuning) on targeted data rather than by editing values by hand, can steer the model toward text that meets specific criteria.
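The idea that parameters are learned from data, and that further training shifts them, can be shown with the smallest possible model: a line `y = w * x + b` with two parameters. The sketch below is a hypothetical illustration rather than how any production model is trained. It fits `w` and `b` by gradient descent on mean squared error, then continues training from those values on different data as a loose stand-in for fine-tuning.

```python
def fit_line(xs, ys, w=0.0, b=0.0, lr=0.01, steps=5000):
    """Learn parameters w and b of y = w*x + b by gradient descent on mean squared error."""
    n = len(xs)
    for _ in range(steps):
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to each parameter.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # toy data generated by y = 2x + 1
w, b = fit_line(xs, ys)
print("learned:", round(w, 3), round(b, 3))

# "Fine-tune": start from the learned parameters and keep training on new data (y = 3x + 1).
w2, b2 = fit_line(xs, [1, 4, 7, 10, 13], w=w, b=b)
print("after further training:", round(w2, 3), round(b2, 3))
```

The first fit recovers values close to w = 2 and b = 1 purely from the data; continuing from there on data generated by y = 3x + 1 moves the parameters toward w = 3, which changes every prediction the model makes.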

In general, models with more parameters tend to be more capable and accurate, provided they are trained on enough data. With more parameters, a model can learn a wider range of relationships and patterns in the data, which can lead to better performance and more accurate predictions. However, having too many parameters can also be a drawback. A model with too many parameters relative to its training data can overfit, meaning that it is too closely tied to the training data and may not generalize well to new data. Overfitting leads to poor performance on test data and limits the model’s ability to make accurate predictions.
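Overfitting is easiest to see in a toy setting. The hypothetical sketch below draws five noisy training points from the line y = 2x + 1 and fits two models: a least-squares straight line with two parameters, and a degree-4 polynomial with five parameters, one per training point, built by Lagrange interpolation. Both are then scored on held-out points from the same line. The data and noise values are made up for illustration; large neural networks overfit in subtler ways, but the trade-off is the same.

```python
def fit_least_squares_line(xs, ys):
    """Least-squares straight line: just two parameters, slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x

    def predict(x):
        return slope * x + intercept

    return predict


def fit_interpolating_polynomial(xs, ys):
    """Degree-(n-1) polynomial through all n training points (Lagrange form)."""
    def predict(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            basis = 1.0
            for m, xm in enumerate(xs):
                if m != j:
                    basis *= (x - xm) / (xj - xm)
            total += yj * basis
        return total

    return predict


def mse(predict, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def true_fn(x):
    return 2 * x + 1  # the underlying pattern both models try to learn


train_x = [0, 1, 2, 3, 4]
noise = [0.0, 0.5, -0.5, 0.5, 0.0]  # hypothetical measurement noise
train_y = [true_fn(x) + e for x, e in zip(train_x, noise)]
test_x = [0.5, 1.5, 2.5, 3.5]
test_y = [true_fn(x) for x in test_x]

models = {
    "2-parameter line": fit_least_squares_line(train_x, train_y),
    "5-parameter polynomial": fit_interpolating_polynomial(train_x, train_y),
}
for name, model in models.items():
    print(f"{name}: train MSE {mse(model, train_x, train_y):.3f}, "
          f"test MSE {mse(model, test_x, test_y):.3f}")
```

The polynomial passes through every training point, so its training error is zero, but it bends to follow the noise and does far worse on the held-out points than the simple line, whose training error is higher.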

Exploring Generative AI’s Use Cases

Generative AI has a wide range of current and future use cases across a variety of industries. Here are some of the most important ones:

- Content creation: Generative AI models are being used to create new and original content in areas such as text, music, art, and video. For example, AI-generated music and art can be used to create new and innovative forms of expression, while AI-generated text can be used to develop news articles, product descriptions, and marketing content.

- Virtual customer service: Basic chatbots have been around for years, but generative AI models can deliver more robust virtual customer service agents to assist customers with increasingly complex inquiries. By using AI to generate responses to customers’ questions, companies can improve the efficiency and quality of their customer service operations.

- Health care: Modern society produces large amounts of health care data, including electronic medical records and imaging data. With the help of generative AI, these data could be used to develop new diagnostic tools, predict disease outcomes, and improve patient care.

- Finance: In finance, generative AI models can be leveraged to form predictions about stock prices, exchange rates, and other financial metrics. This information could be used to make investment decisions and potentially improve financial planning.

- Language translation: Generative AI models have the potential to vastly improve the accuracy and efficiency of language translation services. By using AI to generate translations, companies can improve the quality of their products and services and increase their global reach.

- Gaming: New and innovative game experiences can also be created using generative AI models. For example, AI-generated characters, environments, and storylines can be used to create unique and engaging game experiences.

- Search: The application of generative AI to search has the potential to significantly improve the search experience for users, making it more efficient, personalized, and conversational. Generative AI can understand natural language queries and provide relevant search results, allowing users to ask questions in a conversational format and receive answers in real time.

These are just a few of the many current and future use cases of generative AI. As this technology continues to evolve and improve, it has the potential to revolutionize many industries and have a major impact on the way we live and work. As a guiding framework, we can ask ourselves, “Where does text exist today?” That is where the technology has the strongest disruptive potential in the near term.

Conclusion: Generative AI Is in Its Infancy and Opportunities Abound

Generative AI has come a long way since its early beginnings, and it continues to evolve at a rapid pace. While the technology has already been incorporated into myriad applications, use cases are likely to expand quickly as it matures. From creating new and original content to revolutionizing industries, generative AI has the potential to shape the future in countless ways. Whether it’s creating new forms of art and expression, improving health care outcomes, or making investment decisions, the possibilities for generative AI are virtually limitless. The outlook for generative AI appears bright, and we expect to see many exciting developments in this field in the years to come.

Related ETFs

AIQ – Global X Artificial Intelligence & Technology ETF

BOTZ – Global X Robotics & Artificial Intelligence ETF

Holdings are subject to change. Current and future holdings are subject to risk.